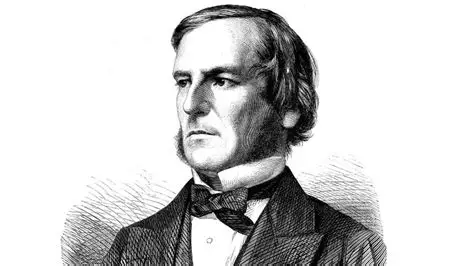

George Boole

Pioneer of Boolean Algebra and Symbolic Logic

George Boole (November 2, 1815 – December 8, 1864) was an English mathematician, logician, and educator whose algebraic treatment of logic, now known as Boolean algebra, became a cornerstone of digital computing. His work linked mathematics, logic, and philosophy and laid a foundation for modern computer science.

Early Life and Education

Born in Lincoln, England, Boole was largely self-taught, mastering Latin, Greek, mathematics, and science through his own efforts. He began teaching at age 16 to support his family and published his first mathematics paper in 1841.

Mathematical Logic and Boolean Algebra

In his 1847 pamphlet The Mathematical Analysis of Logic and his 1854 book An Investigation of the Laws of Thought, Boole introduced an algebraic system for logic. He represented logical statements with algebraic symbols and combined them using operations analogous to multiplication, addition, and subtraction, writing \( 1 - x \) for the negation of \( x \).

This system, refined by later logicians into what is now called Boolean algebra, describes the rules for combining the truth values true and false.

Boolean Algebra Notation

Boole’s logic works with the two-element set \( \{0, 1\} \), where \( 1 \) denotes "true" and \( 0 \) denotes "false". The three basic operations are:

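- Conjunction (AND), written \( a \land b \) or algebraically \( a \cdot b \): \[ a \cdot b = \begin{cases} 1 & \text{if } a = 1 \text{ and } b = 1 \\ 0 & \text{otherwise} \end{cases} \]

- Disjunction (OR), written \( a \lor b \) or algebraically \( a + b \), where \( + \) denotes logical OR rather than ordinary addition, so that \( 1 + 1 = 1 \): \[ a + b = \begin{cases} 1 & \text{if } a = 1 \text{ or } b = 1 \\ 0 & \text{otherwise} \end{cases} \]

- Negation (NOT), written \( \lnot a \) or \( \overline{a} \), equivalently \( 1 - a \): \[ \overline{a} = \begin{cases} 1 & \text{if } a = 0 \\ 0 & \text{if } a = 1 \end{cases} \]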
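The three case definitions of AND, OR, and NOT can be checked mechanically. The Python sketch below is a minimal illustration, assuming 0 and 1 stand for false and true as in the text; it encodes each definition as a function and prints the full truth table. The names `and_`, `or_`, `not_`, and `truth_table` are hypothetical, chosen here only for illustration.

```python
from itertools import product


def and_(a: int, b: int) -> int:
    # Conjunction: the product a * b is 1 only when both inputs are 1.
    return a * b


def or_(a: int, b: int) -> int:
    # Disjunction: 1 if either input is 1. Using max() keeps the result
    # in {0, 1}, so 1 OR 1 gives 1 rather than the ordinary sum 2.
    return max(a, b)


def not_(a: int) -> int:
    # Negation: the complement within {0, 1}, written 1 - a.
    return 1 - a


def truth_table() -> list[tuple[int, int, int, int, int]]:
    """Return rows (a, b, a AND b, a OR b, NOT a) for every input pair."""
    return [(a, b, and_(a, b), or_(a, b), not_(a))
            for a, b in product((0, 1), repeat=2)]


if __name__ == "__main__":
    print("a b | AND OR NOT a")
    for a, b, conj, disj, neg in truth_table():
        print(f"{a} {b} |  {conj}   {disj}    {neg}")
```

Using `max` for OR rather than plain `+` is a deliberate choice: it keeps every result inside \( \{0, 1\} \), matching the case definition of OR, under which `or_(1, 1)` is 1.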

Impact on Modern Technology

Although Boole worked long before electronic computers existed, his algebra underpins modern digital circuits. Logic gates such as AND, OR, and NOT are physical implementations of Boolean operations, and combining them yields the circuits that store and process data in a computer.

Academic Career and Honors

In 1849, Boole was appointed the first professor of mathematics at Queen’s College, Cork (now University College Cork) in Ireland. The Royal Society, which had awarded him its Royal Medal in 1844, elected him a Fellow in 1857, and he became a respected figure in both mathematics and philosophy.

Death

In 1864, Boole died at age 49 from pneumonia, reportedly contracted after walking to a lecture in heavy rain and teaching in wet clothes.

Legacy

George Boole’s work bridged human reasoning and mathematics, enabling the design of modern digital computers and programming languages. His name lives on in the term Boolean, a tribute to his profound influence on logic, mathematics, and computer science.